ETL with AWS RDS & PostgreSQL Engine (Tutorial)

Overview

Building a customized database from 95 different data sources across various spatiotemporal units requires an efficient way to integrate the data into the final destination.

Therefore, ETL is a type of data integration process referring to three distinct but interrelated steps (Extract, Transform and Load) and is used to synthesize data from multiple sources many times to build a Data Warehouse.

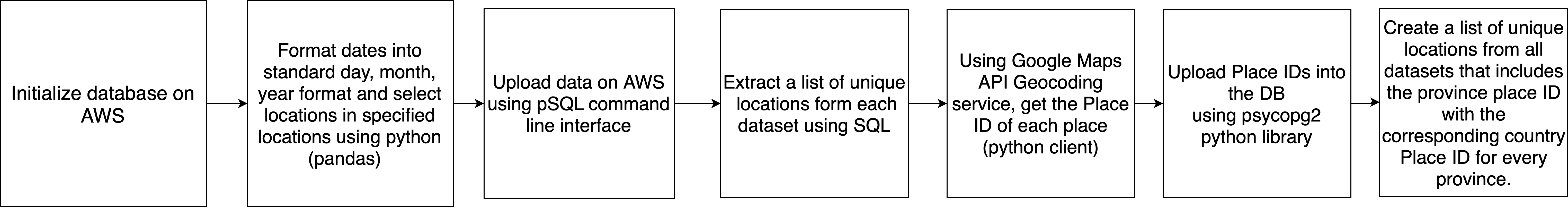

ETL Pipeline

1. Extract

1. Extract

Data pipelines were created on raw data in order to host it on a relational database server.

2. Transform

After extraction, the data was labeled using the date and location codes. These labels are essential for merging the datasets together. Location

A new variable (location_id) is created to store a unified unique identification (according to the Google Place IDs encoding system) for the location variables (listed below). The codes in this variable were encoding using the Google Geocoding API and Geolocation API.

An example of appending date ID:

from dateformat import DateFormat

date_format = DateFormat("YYYYMMDD")

def getformat(row):

if output not in dates['date_ID']:

dates['date_ID'].insert(output)

dates['format'].insert('YYYYMMDD')

xSub['date_ID'] = xSub['DATE']

xSub['date_ID'].apply(getformat,axis=1)

The dates are also labeled using 3 new variables: day_ID (DD format), month_ID (MM format), and year_ID (YYYY format) in order to ensure the date format was unified across different datasets. Below are the variables that were used to label dates:

3. Load

After transformation, the data is loaded into PostgreSQL relational database as follows:

Create the tables on pgAdmin 4 using SQL code generated from CSVKit csvsql command

Load the files into their respective tables using PSQL COPY command

Connecting to RDS through PostgreSQL?

1. psql (on Terminal)

Initialization:

- install brew if you don’t have it

/bin/bash -c "$(curl -fsSL [https://raw.githubusercontent.com/Homebrew/install/master/install.sh](https://raw.githubusercontent.com/Homebrew/install/master/install.sh))"

- install psql

brew doctor

brew update

brew install libpq

- link the psql command

brew link --force libpq

Connection:

- type the following and then enter password (provided at the top section )

psql -h project0.cauhw7rsy7uq.us-west-1.rds.amazonaws.com -p 5432 -U postgres -W -d caesar

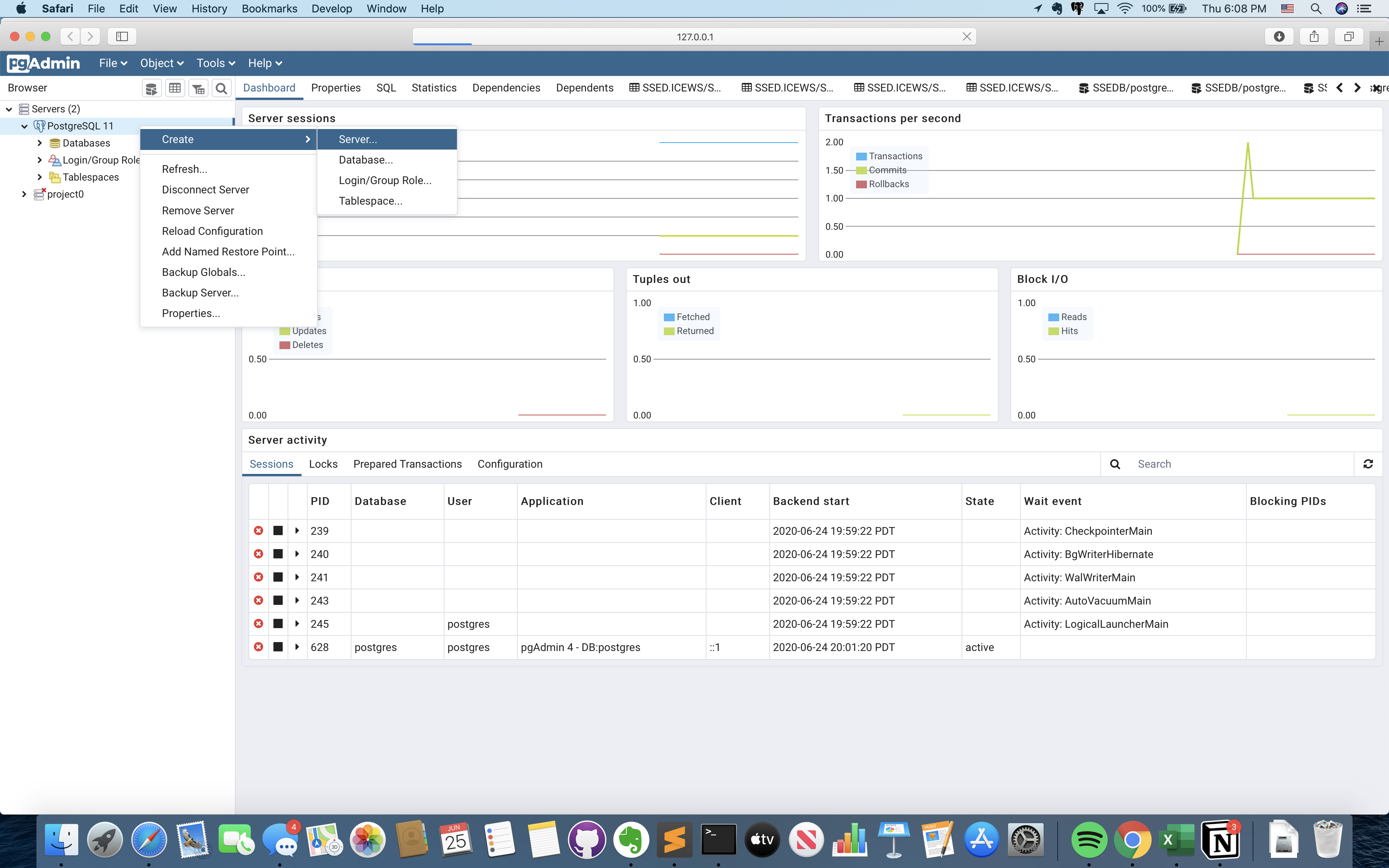

2. pgadmin 4

Initialization:

- Download and install: https://www.pgadmin.org/download/pgadmin-4-macos/

- Right click on postgreSQL 11 and choose create → server

3. Python

import psycopg2

import sys

import boto3

ENDPOINT="xxxx"

PORT="xxxx"

USR="postgres"

REGION="us-west-1"

DBNAME="postgres"

#gets the credentials from .aws/credentials

session = boto3.Session(profile_name='default')

client = boto3.client('rds')

#token = client.generate_db_auth_token(DBHostname=ENDPOINT, Port=PORT, DBUsername=USR, Region=REGION)

token = 'Graywater802$'

try:

conn = psycopg2.connect(host=ENDPOINT, port=PORT, database=DBNAME, user=USR, password=token)

cur = conn.cursor()

cur.execute("""SELECT now()""")

query_results = cur.fetchall()

print(query_results)

except Exception as e:

print("Database connection failed due to {}".format(e))